🏃♂️ 해당 글은 Tensorflow를 사용하기에 환경이 구축되어야 합니다.

Anaconda3 + tensorflow 키워드로 구글링해서 나오는 블로그들을 참고 바랍니다. :)

00. 목표

드럼 악보 이미지를 넣었을 때, 음표(해당 글에서는 음정만 구분)를 구분하는 모델을 만듭니다.

• Input : 악보 이미지

• Output : 음정

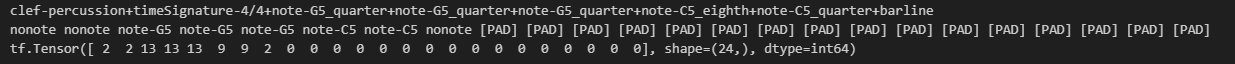

아래는 해당 글에서 사용할 데이터셋 샘플입니다. 각 마디에 대한 이미지와 라벨 데이터로 이루어져 있습니다.

드럼 데이터 셋

데이터셋은 아래와 같습니다.

특히, 라벨은 Alfaro가 단일음향 음악을 좌에서 우로 읽는 1차원 시퀀스로 나타내기 위해 제안한 형태입니다.

이 인코딩은 각 차례대로 나타나는 note와 symbol 사이에 '+' 기호를 추가하고, 코드의 개별 음표를 아래에서 위 순서대로 나열합니다. 그리고 음표가 동시에 나온 경우는 '|' 기호를 추가합니다.

해당 글에서는 음정만을 구분하기 때문에 non-note 음악 기호(clefs, key signatures, time signatures, and barlines)와 쉼표는 nonote로 구분합니다.

clef-percussion+note-F4_quarter|note-A5_quarter+note-C5_eighth|note-G5_eighth+note-G5_eighth+note-F4_eighth|note-G5_eighth+note-F4_eighth|note-G5_eighth+note-C5_eighth|note-G5_eighth+note-G5_eighth+barline라벨

(참고) 음정표

01. 배경

어떻게 학습하고 음표를 구분할 수 있는지 원리를 간단하게 살펴보겠습니다.

학습에는 3가지 알고리즘이 사용됩니다.

- CNN (Convolution Neural Network)

- RNN (Recurrent Neural Network)

- CTC Algorithm (Connectionist Temporal Classification)

CNN을 통해 이미지로부터 Feature Sequence를 추출합니다.

추출한 Feature Sequence들을 RNN의 Input으로 하여 이미지의 Text Sequence를 예측합니다.

이렇게 CNN + RNN 으로 이루어진 모델을 CRNN이라고 합니다.

그럼 Sequence Modeling에서 CRNN을 사용하는 이유는 무엇일까요?

CNN은 전체 이미지에서 특정 부분만 반영하기 때문에 전체 이미지에 대한 정보를 담을 수 없다는 한계가 있습니다.

이를 보완하기 위해 입력과 출력을 시퀀스 단위로 처리하는 RNN을 사용하여 정보를 종합해서 문자를 예측할 수 있도록 하는 것입니다.

CTC는 음성 인식과 문자 인식에서 사용되는 알고리즘입니다.

음성 혹은 이미지로부터 어디서부터 어디까지가 한 문자에 해당하는지 파악하는 것이 어렵기 때문에 관계 정렬을 위해 사용됩니다.

CTC는 이미지에 대해 임의로 분할된 각 영역마다의 특징에 대해 확률적으로 예측하게 됩니다.

P(Y|X) 즉, 주어진 X에 대해서 Y일 조건부 확률을 계산해 주게 됩니다.

위 그림에서 ϵ을 Blank Token이라고 부르는데, 문자 이미지가 없는 부분은 빈칸(Blank)으로 처리하고

각 단계별 예측된 중복 문자들을 합쳐서 최종 문자를 얻게 됩니다.

02. Library

필요한 라이브러리를 불러오겠습니다.

import glob

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

from sklearn.model_selection import train_test_split

03. Data Load

이미지와 Label을 담은 리스트를 생성해주겠습니다.

x_dataset_path=f"{dataset_path}/measure/"

x_all_dataset_path = glob.glob(f"{x_dataset_path}/*")

x_file_list = [file for file in x_all_dataset_path if file.endswith(f".png")]

x_file_list.sort()

y_dataset_path=f"{dataset_path}/annotation/"

y_all_dataset_path = glob.glob(f"{y_dataset_path}/*")

y_file_list = [file for file in y_all_dataset_path if file.endswith(f".txt")]

y_file_list.sort()

images = x_file_list

labels = y_file_list

print("총 이미지 개수: ", len(images))

print("총 라벨 개수: ", len(labels))

배치 사이즈, 이미지 크기 등도 지정해줍니다.

# 배치 사이즈 지정

batch_size = 16

# 이미지 크기 지정

img_width = 256

img_height = 128

# 제일 긴 라벨 길이

max_length = 24

04. Data Pre-Processing

문자를 숫자로 encoding 하고, 숫자를 문자로 decoding 하기 위한 char_to_num과 num_to_char를 만들어주겠습니다.

우선 Pitch(음정)에 대해 구분하는 모델을 위해 필요한 vocabulary를 아래와 같이 정의하겠습니다.

char_to_int_mapping = [

"|", #1

"nonote",#2

"note-D4",#3

"note-E4",#4

"note-F4",#5

"note-G4",#6

"note-A4",#7

"note-B4",#8

"note-C5",#9

"note-D5",#10

"note-E5",#11

"note-F5",#12

"note-G5",#13

"note-A5",#14

"note-B5",#15

]

# 문자를 숫자로 변환

char_to_num = layers.StringLookup(

vocabulary=list(char_to_int_mapping), mask_token=None

)

# 숫자를 문자로 변환

num_to_char = layers.StringLookup(

vocabulary=char_to_num.get_vocabulary(), mask_token=None, invert=True

)

char_to_num.get_vocabulary() 으로 다음과 같이 지정된 vocabulary를 확인할 수 있습니다.

현재 라벨엔 음표, 쉼표, 마디선 등 모든 게 다 포함되어 있기 떄문에 이중에서 음표 정보만 가져와 vocabulary에 매핑되도록 처리하겠습니다.

# 각 token에 맞는 string list로 만들기

def map_pitch(note):

pitch_mapping = {

"note-D4": 1,

"note-E4": 2,

"note-F4": 3,

"note-G4": 4,

"note-A4": 5,

"note-B4": 6,

"note-C5": 7,

"note-D5": 8,

"note-E5": 9,

"note-F5": 10,

"note-G5": 11,

"note-A5": 12,

"note-B5": 13,

}

return "nonote" if note not in pitch_mapping else note

def map_rhythm(note):

duration_mapping = {

"[PAD]":0,

"+": 1,

"|": 2,

"barline": 3,

"clef-percussion": 4,

"note-eighth": 5,

"note-eighth.": 6,

"note-half": 7,

"note-half.": 8,

"note-quarter": 9,

"note-quarter.": 10,

"note-16th": 11,

"note-16th.": 12,

"note-whole": 13,

"note-whole.": 14,

"rest_eighth": 15,

"rest-eighth.": 16,

"rest_half": 17,

"rest_half.": 18,

"rest_quarter": 19,

"rest_quarter.": 20,

"rest_16th": 21,

"rest_16th.": 22,

"rest_whole": 23,

"rest_whole.": 24,

"timeSignature-4/4": 25

}

return note if note in duration_mapping else "<unk>"

def map_lift(note):

lift_mapping = {

"lift_null" : 1,

"lift_##" : 2,

"lift_#" : 3,

"lift_bb" : 4,

"lift_b" : 5,

"lift_N" : 6

}

return "nonote" if note not in lift_mapping else note

def symbol2pitch_rhythm_lift(symbol_lift, symbol_pitch, symbol_rhythm):

return map_lift(symbol_lift), map_pitch(symbol_pitch), map_rhythm(symbol_rhythm)

def note2pitch_rhythm_lift(note):

# note-G#3_eighth

note_split = note.split("_") # (note-G#3) (eighth)

note_pitch_lift = note_split[:1][0]

note_rhythm = note_split[1:][0]

rhythm=f"note-{note_rhythm}"

note_note, pitch_lift = note_pitch_lift.split("-") # (note) (G#3)

if len(pitch_lift)>2:

pitch = f"note-{pitch_lift[0]+pitch_lift[-1]}" # (G3)

lift = f"lift_{pitch_lift[1:-1]}"

else:

pitch = f"note-{pitch_lift}"

lift = f"lift_null"

return symbol2pitch_rhythm_lift(lift, pitch, rhythm)

def rest2pitch_rhythm_lift(rest):

# rest-quarter

return symbol2pitch_rhythm_lift("nonote", "nonote", rest)

def map_pitch2isnote(pitch_note):

group_notes = []

note_split = pitch_note.split("+")

for note_s in note_split:

if "nonote" in note_s:

group_notes.append("nonote")

elif "note-" in note_s:

group_notes.append("note")

return "+".join(group_notes)

def map_notes2pitch_rhythm_lift_note(note_list):

result_lift=[]

result_pitch=[]

result_rhythm=[]

result_note=[]

for notes in note_list:

group_lift = []

group_pitch = []

group_rhythm = []

group_notes_token_len=0

# 우선 +로 나누고, 안에 | 있는 지 확인해서 먼저 붙이기

# note-G#3_eighth + note-G3_eighth + note-G#3_eighth|note-G#3_eighth + rest-quarter

note_split = notes.split("+")

for note_s in note_split:

if "|" in note_s:

mapped_lift_chord = []

mapped_pitch_chord = []

mapped_rhythm_chord = []

# note-G#3_eighth|note-G#3_eighth

note_split_chord = note_s.split("|") # (note-G#3_eighth) (note-G#3_eighth)

for idx, note_s_c in enumerate(note_split_chord):

chord_lift, chord_pitch, chord_rhythm = note2pitch_rhythm_lift(note_s_c)

mapped_lift_chord.append(chord_lift)

mapped_pitch_chord.append(chord_pitch)

mapped_rhythm_chord.append(chord_rhythm)

# --> '|' 도 token이기 때문에 lift, pitch엔 nonote 추가해주기

if idx != len(note_split_chord)-1:

mapped_lift_chord.append("nonote")

# mapped_pitch_chord.append("nonote")

group_lift.append(" ".join(mapped_lift_chord))

group_pitch.append(" | ".join(mapped_pitch_chord))

group_rhythm.append(" | ".join(mapped_rhythm_chord))

# --> '|' 도 token이기 때문에 추가된 token 개수 더하기

# 동시에 친 걸 하나의 string으로 해버리는 거니까 주의하기

group_notes_token_len+=len(note_split_chord) + len(note_split_chord)-1

elif "note" in note_s:

if "_" in note_s:

# note-G#3_eighth

note2lift, note2pitch, note2rhythm = note2pitch_rhythm_lift(note_s)

group_lift.append(note2lift)

group_pitch.append(note2pitch)

group_rhythm.append(note2rhythm)

group_notes_token_len+=1

elif "rest" in note_s:

if "_" in note_s:

# rest_quarter

rest2lift, rest2pitch, rest2rhythm =rest2pitch_rhythm_lift(note_s)

group_lift.append(rest2lift)

group_pitch.append(rest2pitch)

group_rhythm.append(rest2rhythm)

group_notes_token_len+=1

else:

# clef-F4+keySignature-AM+timeSignature-12/8

symbol2lift, symbol2pitch, symbol2rhythm = symbol2pitch_rhythm_lift("nonote", "nonote", note_s)

group_lift.append(symbol2lift)

group_pitch.append(symbol2pitch)

group_rhythm.append(symbol2rhythm)

group_notes_token_len+=1

toks_len= group_notes_token_len

# lift, pitch

emb_lift= " ".join(group_lift)

emb_pitch= " ".join(group_pitch)

# rhythm

emb_rhythm= " ".join(group_rhythm)

# 뒤에 남은 건 패딩

if toks_len < max_length :

for _ in range(max_length - toks_len ):

emb_lift+=" [PAD]"

emb_pitch+=" [PAD]"

emb_rhythm+=" [PAD]"

result_lift.append(emb_lift)

result_pitch.append(emb_pitch)

result_rhythm.append(emb_rhythm)

result_note.append(map_pitch2isnote(emb_pitch))

return result_lift, result_pitch, result_rhythm, result_notedef read_txt_file(file_path):

# 텍스트 파일을 읽어서 내용을 리스트로 반환

with open(file_path, 'r', encoding='utf-8') as file:

content = file.readlines()

# 각 줄의 개행 문자 제거

content = [line.strip() for line in content]

return content[0]

contents = []

# 각 파일을 읽어서 내용을 리스트에 추가

for annotation_path in labels:

content = read_txt_file(annotation_path)

# 사이사이에 + 로 연결해주기

content=content.replace(" ","+")

content=content.replace("\t","+")

contents.append(content)

result_lift, result_pitch, result_rhythm, result_note = map_notes2pitch_rhythm_lift_note(contents)

labels=result_pitch

처리된 걸 확인해보겠습니다.

print(contents[0])

print(labels[0])

print(char_to_num(tf.strings.split(labels[0])))

sklearn의 train_test_split()을 이용해 Data를 Train Set과 Validation Set으로 나누어 각 변수에 저장합니다.

총 Dataset에서 90%를 Train Set으로 사용하고 10%를 Validation Set으로 지정해 주기 위해 test_size를 0.1로 설정했습니다.

x_train, x_valid, y_train, y_valid = train_test_split(np.array(images), np.array(labels), test_size=0.1)

마지막으로 Dataset 생성 시 적용될 encode_single_sample() 함수를 지정해줍니다. 이 함수를 이용해 데이터를 tensorflow에 적합한 형태로 변환시켜줄 수 있도록 합니다.

이미지는 바이너리 이미지로 변환되고, 위에서 지정한 크기에 맞게 resize됩니다. 이후, 이미지가 원래 가로로 긴 형태였는데 첫 음표부터 순차적으로 해석하길 원하기 때문에 위에서부터 아래로 이미지를 읽을 수 있도록 이미지의 가로 세로를 변환합니다.

라벨은 각 string마다 split하여 encoding되도록 합니다.

def encode_single_sample(img_path, label):

# 1. 이미지 불러오기

img = tf.io.read_file(img_path)

# 2. 이미지로 변환하고 grayscale로 변환

img = tf.io.decode_png(img, channels=1)

# 3. [0,255]의 정수 범위를 [0,1]의 실수 범위로 변환

img = tf.image.convert_image_dtype(img, tf.float32)

# 4. 이미지 resize

img = tf.image.resize(img, [img_height, img_width])

# 5. 이미지의 가로 세로 변환

img = tf.transpose(img, perm=[1, 0, 2])

# 6. 라벨 값의 문자를 숫자로 변환

label_r = char_to_num(tf.strings.split(label))

# 7. 딕셔너리 형태로 return

return {"image": img, "label": label_r}

05. Dataset 객체 생성

tf.data.Dataset을 이용하여 numpy array 혹은 tensor로부터 Dataset을 만들어주겠습니다.

위에서 정의한 encode_single_sample 함수를 정의하여 위에서 지정한 batch size로 train, validation Dataset을 만들어주겠습니다.

train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))

train_dataset = (

train_dataset.map(

encode_single_sample, num_parallel_calls=tf.data.AUTOTUNE

)

.batch(batch_size)

.prefetch(buffer_size=tf.data.AUTOTUNE)

)

validation_dataset = tf.data.Dataset.from_tensor_slices((x_valid, y_valid))

validation_dataset = (

validation_dataset.map(

encode_single_sample, num_parallel_calls=tf.data.AUTOTUNE

)

.batch(batch_size)

.prefetch(buffer_size=tf.data.AUTOTUNE)

)

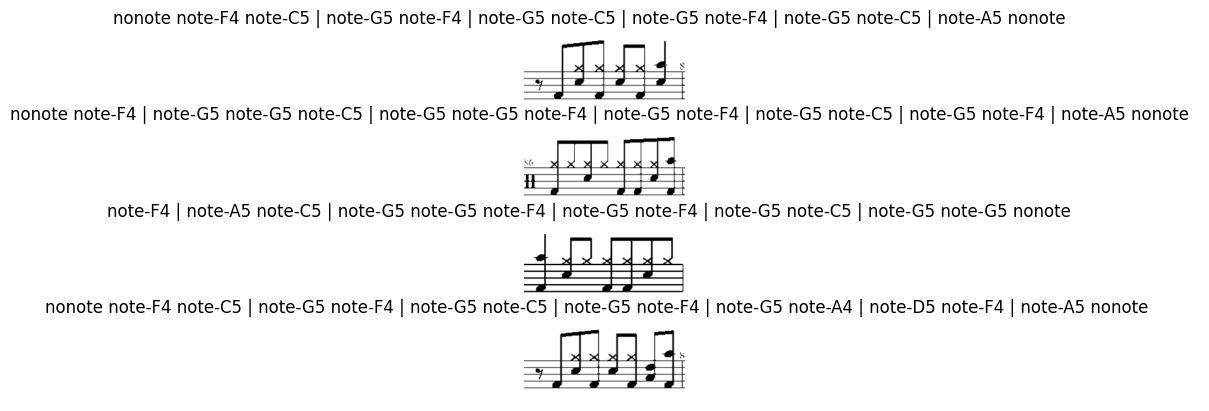

06. Data 시각화

만들어진 Dataset의 이미지와 Label을 확인해보겠습니다.

이미지는 가로와 세로를 변환했었는데, 아래 코드에서는 imshow(img[:, :, 0].T에서 T를 사용해서 이미지를 다시 Transpose 하여 보기 쉽게 출력해 주었습니다.

_, ax = plt.subplots(4, 1)

for batch in train_dataset.take(1):

images = batch["image"]

labels = batch["label"]

for i in range(4):

img = (images[i] * 255).numpy().astype("uint8")

label = tf.strings.join(num_to_char(labels[i]), separator=' ').numpy().decode("utf-8").replace('[UNK]', '')

print(labels[i])

ax[i].imshow(img[:, :, 0].T, cmap="gray")

ax[i].set_title(label)

ax[i].axis("off")

plt.show()

07. Model

CTC Loss를 구하기 위한 CTC Layer 클래스를 구현하겠습니다.

CTC Loss는 keras.backend.ctc_batch_cost를 통해 구현할 수 있습니다.

class CTCLayer(layers.Layer):

def __init__(self, name=None):

super().__init__(name=name)

self.loss_fn = keras.backend.ctc_batch_cost

def call(self, y_true, y_pred):

batch_len = tf.cast(tf.shape(y_true)[0], dtype="int64")

input_length = tf.cast(tf.shape(y_pred)[1], dtype="int64")

label_length = tf.cast(tf.shape(y_true)[1], dtype="int64")

input_length = input_length * tf.ones(shape=(batch_len, 1), dtype="int64")

label_length = label_length * tf.ones(shape=(batch_len, 1), dtype="int64")

loss = self.loss_fn(y_true, y_pred, input_length, label_length)

self.add_loss(loss)

return y_pred

CRNN 모델을 구현하겠습니다. 2개의 Convolution block과 2개의 LSTM 모델이 결합한 모델입니다.

학습에 사용되는 loss는 위에서 지정한 CTC Layer의 loss를 이용해서 학습하도록 설정해 줍니다.

def build_model():

# Inputs

input_img = layers.Input(

shape=(img_width, img_height, 1), name="image", dtype="float32"

)

labels = layers.Input(name="label", shape=(None,), dtype="float32")

# 첫번째 convolution block

x = layers.Conv2D(

32,

(3, 3),

activation="relu",

kernel_initializer="he_normal",

padding="same",

name="Conv1",

)(input_img)

x = layers.MaxPooling2D((2, 2), name="pool1")(x)

# 두번째 convolution block

x = layers.Conv2D(

64,

(3, 3),

activation="relu",

kernel_initializer="he_normal",

padding="same",

name="Conv2",

)(x)

x = layers.MaxPooling2D((2, 2), name="pool2")(x)

# 앞에 2개의 convolution block에서 maxpooling(2,2)을 총 2번 사용

# feature map의 크기는 1/4로 downsampling

# 마지막 layer의 filter 수는 64개 다음 RNN에 넣기 전에 reshape

new_shape = ((img_width // 4), (img_height // 4) * 64)

x = layers.Reshape(target_shape=new_shape, name="reshape")(x)

x = layers.Dense(64, activation="relu", name="dense1")(x)

x = layers.Dropout(0.2)(x)

# RNNs

x = layers.Bidirectional(layers.LSTM(128, return_sequences=True, dropout=0.25))(x)

x = layers.Bidirectional(layers.LSTM(64, return_sequences=True, dropout=0.25))(x)

# Output layer

x = layers.Dense(

len(char_to_num.get_vocabulary()) + 1, activation="softmax", name="dense2"

)(x)

# ctc loss

output = CTCLayer(name="ctc_loss")(labels, x)

# Model

model = keras.models.Model(

inputs=[input_img, labels], outputs=output, name="omr"

)

# Optimizer

opt = keras.optimizers.Adam()

model.compile(optimizer=opt)

return model

# Model

model = build_model()

model.summary()

08. Train

그럼 이제 epoch를 200으로 설정하고 early stopping은 patience를 10으로 지정하여 학습을 해보도록 하겠습니다.

epochs = 200

early_stopping_patience = 10

early_stopping = keras.callbacks.EarlyStopping(

monitor="val_loss", patience=early_stopping_patience, restore_best_weights=True

)

history = model.fit(

train_dataset,

validation_data=validation_dataset,

epochs=epochs,

callbacks=[early_stopping],

)Epoch 1/200

6/6 [==============================] - 9s 339ms/step - loss: 99.2571 - val_loss: 62.9635

Epoch 2/200

6/6 [==============================] - 0s 49ms/step - loss: 58.3946 - val_loss: 49.3992

Epoch 3/200

6/6 [==============================] - 0s 42ms/step - loss: 51.5517 - val_loss: 46.9572

Epoch 4/200

6/6 [==============================] - 0s 42ms/step - loss: 49.1146 - val_loss: 45.2404

Epoch 5/200

6/6 [==============================] - 0s 40ms/step - loss: 47.8802 - val_loss: 43.4212

Epoch 6/200

6/6 [==============================] - 0s 41ms/step - loss: 45.6561 - val_loss: 41.9743

Epoch 7/200

6/6 [==============================] - 0s 40ms/step - loss: 44.0756 - val_loss: 41.5652

Epoch 8/200

6/6 [==============================] - 0s 39ms/step - loss: 43.6151 - val_loss: 38.8865

Epoch 9/200

6/6 [==============================] - 0s 39ms/step - loss: 41.6590 - val_loss: 39.4570

Epoch 10/200

6/6 [==============================] - 0s 40ms/step - loss: 41.6629 - val_loss: 38.0012

Epoch 11/200

6/6 [==============================] - 0s 40ms/step - loss: 40.3495 - val_loss: 37.4150

Epoch 12/200

6/6 [==============================] - 0s 38ms/step - loss: 39.3751 - val_loss: 38.2025

Epoch 13/200

6/6 [==============================] - 0s 39ms/step - loss: 38.5040 - val_loss: 37.1740

...

Epoch 171/200

6/6 [==============================] - 0s 39ms/step - loss: 2.8438 - val_loss: 2.2756

Epoch 172/200

6/6 [==============================] - 0s 40ms/step - loss: 2.7682 - val_loss: 2.1957

09. Predict

학습된 모델로 Validation Data를 음정으로 출력하기 위한 모델을 만들어줍니다.

출력값을 Decoding하기 위해 decode_batch_predictions라는 함수를 지정합니다.

# Prediction Model

prediction_model = keras.models.Model(

model.get_layer(name="image").input, model.get_layer(name="dense2").output

)

prediction_model.summary()

# Decoding

def decode_batch_predictions(pred):

input_len = np.ones(pred.shape[0]) * pred.shape[1]

results = keras.backend.ctc_decode(pred, input_length=input_len, greedy=True)[0][0][

:, :max_length

]

output_text = []

for res in results:

print(res)

res = tf.strings.join(num_to_char(res), separator=' ').numpy().decode("utf-8").replace('[UNK]', '')

output_text.append(res)

return output_text

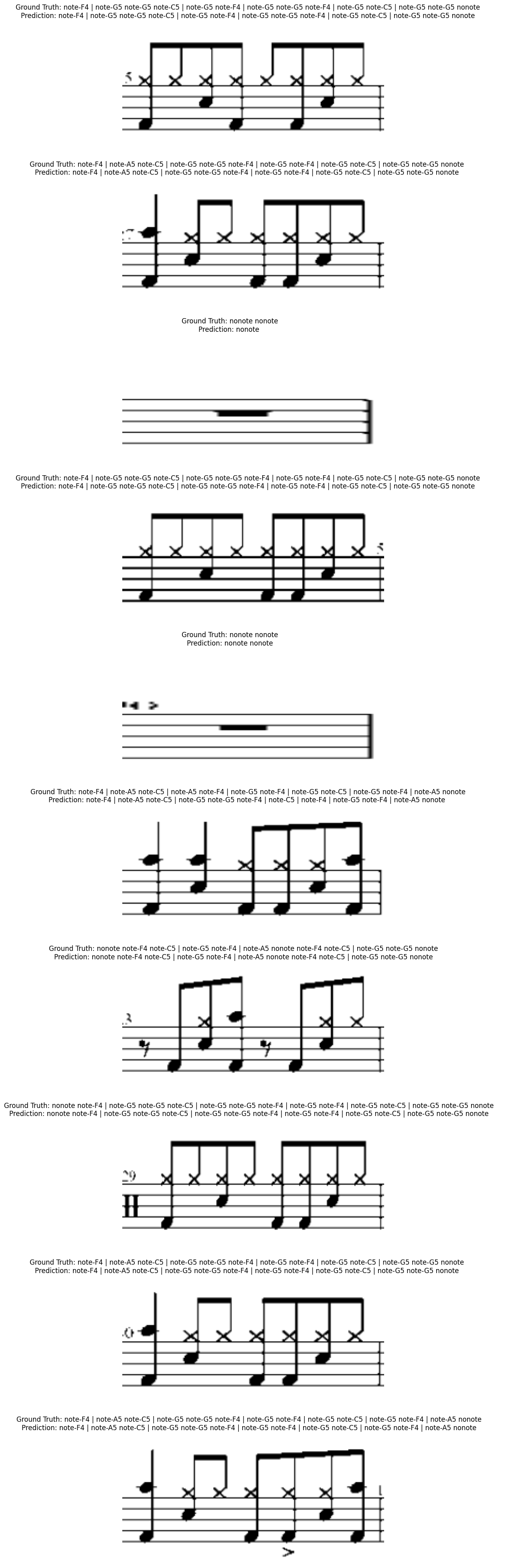

10. 예측 결과 확인

prediction_model에 validation_dataset 배치 한 개를 넣어 시각화해보겠습니다.

# validation dataset에서 하나의 배치를 시각화

for batch in validation_dataset.take(1):

batch_images = batch["image"]

batch_labels = batch["label"]

preds = prediction_model.predict(batch_images)

pred_texts = decode_batch_predictions(preds)

orig_texts = []

for label in batch_labels:

label = tf.strings.join(num_to_char(label), separator=' ').numpy().decode("utf-8").replace('[UNK]', '')

orig_texts.append(label)

_, ax = plt.subplots(10, 1, figsize=(100, 50))

for i in range(len(pred_texts)):

img = (batch_images[i, :, :, 0] * 255).numpy().astype(np.uint8)

img = img.T

title = f"Prediction: {pred_texts[i]}"

ax[i].imshow(img, cmap="gray")

ax[i].set_title(title)

ax[i].axis("off")

plt.show()

해당 글은 음정 구분을 위한 모델입니다.

쉼표 및 마디선 등을 구분하기 위해선 추가 라벨링 작업이 필요합니다.

데이터셋 샘플도 92개밖에 없어, 성능이 좋지 않은데 데이터를 대용량을 생성하여 학습하면 더 좋은 성능을 보일 것으로 예상합니다.

감사합니다.

참고문헌

End-to-End Neural Optical Music Recognition of Monophonic Scores

Optical Music Recognition is a field of research that investigates how to computationally decode music notation from images. Despite the efforts made so far, there are hardly any complete solutions to the problem. In this work, we study the use of neural n

www.mdpi.com

'💻 My Work > 🧠 AI' 카테고리의 다른 글

| [딥러닝] TensorFlow를 사용한 딥러닝 CNN 드럼 소리 분류 (0) | 2023.11.24 |

|---|---|

| [MiniHack] 환경 세팅 (0) | 2023.01.04 |

| [인공지능/혼공머신] 07-1. 인공 신경망 (3) (0) | 2023.01.02 |

| [인공지능/혼공머신] 07-1. 인공 신경망 (2) (0) | 2023.01.01 |

| [인공지능/혼공머신] 07-1. 인공 신경망 (1) (0) | 2022.12.17 |