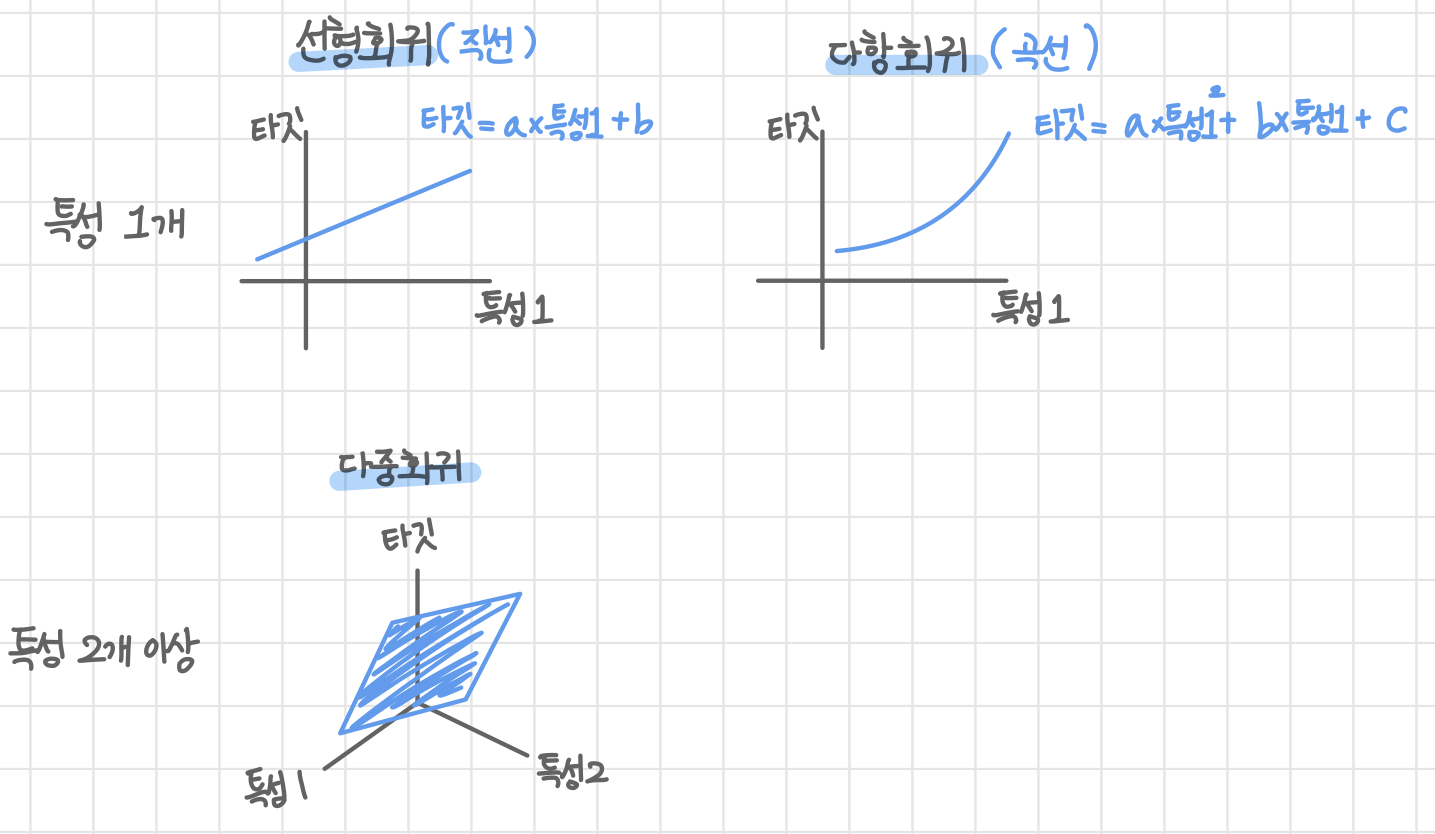

📌 Multiple Regression

Linear regression that uses several features

Below is my earlier post on linear regression. 😁

[AI/혼공머신] 03-2. Linear Regression

avoc-o-d.tistory.com

In the linear regression post, the model was trained on the perch length alone, so some underfitting remained.

Training with more features, such as height and thickness, should improve performance.

📌 Feature Engineering

The work of combining existing features to derive (add, discover) new ones

* Classical machine learning depends heavily on feature engineering; deep learning depends on it less

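Preparing the data with pandas

📌 pandas: a data analysis library (its data structures are similar to NumPy arrays)

📌 DataFrame: the core pandas object, a two-dimensional table with labeled rows and columns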

import pandas as pd

df = pd.read_csv("https://bit.ly/perch_csv_data") # read the CSV file into a pandas DataFrame

perch_full = df.to_numpy() # convert to a NumPy array

📍 Reminder! Rows are samples, columns are features.

Let's check that it loaded correctly.

It loaded fine~!
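A check like this could look like the sketch below. It builds a tiny DataFrame by hand so that it runs offline; the column names and the three rows are made-up stand-ins, not the real contents of the CSV.

```python
import pandas as pd

# Hypothetical stand-in for the perch CSV: 3 samples x 3 features.
df = pd.DataFrame({
    "length": [8.4, 13.7, 15.0],
    "height": [2.11, 3.53, 3.82],
    "width": [1.41, 2.00, 2.43],
})

arr = df.to_numpy()   # same conversion as in the post
print(arr.shape)      # (number of samples, number of features)
print(arr[:2])        # first two samples (rows)
```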

After preparing the target values (the perch weights),

we split the inputs and targets into a training set and a test set.

# http://bit.ly/perch_data

import numpy as np

perch_weight = np.array([5.9, 32.0, 40.0, 51.5, 70.0, 100.0, 78.0, 80.0, 85.0, 85.0, 110.0,

115.0, 125.0, 130.0, 120.0, 120.0, 130.0, 135.0, 110.0, 130.0,

150.0, 145.0, 150.0, 170.0, 225.0, 145.0, 188.0, 180.0, 197.0,

218.0, 300.0, 260.0, 265.0, 250.0, 250.0, 300.0, 320.0, 514.0,

556.0, 840.0, 685.0, 700.0, 700.0, 690.0, 900.0, 650.0, 820.0,

850.0, 900.0, 1015.0, 820.0, 1100.0, 1000.0, 1100.0, 1000.0,

1000.0])

from sklearn.model_selection import train_test_split

# split perch_full and perch_weight into training and test sets

train_input, test_input, train_target, test_target = train_test_split(perch_full, perch_weight, random_state=42)

Now let's combine these features to create new ones!

Instead of building them by hand as in the linear regression post, we'll use a transformer.

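📌 scikit-learn transformers

- Classes that create or preprocess features

- Provide the fit() and transform() methods

📌 scikit-learn's PolynomialFeatures class: a transformer

- Adds the square of each feature and the products of pairs of features ✨

- fit(): works out which feature combinations to create

- transform(): actually transforms the data

* A transformer doesn't need the targets to transform the input data.

▶️ Unlike a model class, it is fitted on the input data only

First, let's see how to use a transformer.

📍 You have to fit() before you can transform().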

from sklearn.preprocessing import PolynomialFeatures

# try it on a single sample with two features, 2 and 3

poly = PolynomialFeatures()

# expected: 1 (bias), 2, 3, 2**2, 2*3, 3**2

poly.fit([[2, 3]]) # work out which feature combinations to create

print(poly.transform([[2, 3]])) # actually transform the data
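As a side note, scikit-learn transformers also offer fit_transform(), which does both steps in one call. The sketch below compares it with the two-step version on the same [2, 3] sample; the variable names are only for illustration.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

sample = [[2, 3]]

# Two-step version: fit, then transform.
two_step = PolynomialFeatures().fit(sample).transform(sample)

# One-step shortcut.
one_step = PolynomialFeatures().fit_transform(sample)

print(one_step)
same = np.array_equal(two_step, one_step)
print(same)
```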

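The sample [2, 3] had only 2 features, and it has been transformed into a sample with 6 features! We have more features now~

🤔 Question: Where does the 1 come from?

💡 Answer: The 1 is a feature for the intercept of the linear equation.

🤔 Question: Why is it there?

💡 Answer: y = a*x + b*1 => [a, b] * [x, 1]. The intercept term lets the whole equation be written as an operation between two arrays. (scikit-learn's linear models add the intercept automatically, so there is no need to put a 1 into the features yourself, as in [1, 2, 3].)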
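The array form of the equation can be checked with a few lines of NumPy. The numbers for a, b, and x below are arbitrary values chosen for illustration.

```python
import numpy as np

a, b = 2.0, 5.0   # hypothetical slope and intercept
x = 3.0           # hypothetical input value

direct = a * x + b                   # y = a*x + b
as_dot = np.dot([a, b], [x, 1.0])    # [a, b] . [x, 1]

print(direct, as_dot)
```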

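🤔 Question: Do we need it?

💡 Answer: No. scikit-learn's linear models fit the intercept on their own, so the extra column of 1s is redundant.

📍 Passing include_bias=False to PolynomialFeatures (the transformer, not the model) stops it from adding the intercept column. (Default: True)

# include_bias=False: don't create the column of 1s for the intercept (even if it is left in, a scikit-learn linear model effectively ignores it)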

poly = PolynomialFeatures(include_bias = False)

poly.fit([[2,3]])

print(poly.transform([[2, 3]]))

The intercept column is gone; the output now holds the original features plus their squares and pairwise products! ✨
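To see that the column of 1s really makes no difference, here is a minimal sketch on synthetic data (the random inputs and the target formula are made up). It fits LinearRegression with and without the bias column and compares the predictions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data: 30 samples, 2 features (illustrative only).
rng = np.random.default_rng(0)
X = rng.uniform(1, 10, size=(30, 2))
y = 3 * X[:, 0] + 2 * X[:, 1] ** 2 + rng.normal(0, 0.1, size=30)

with_bias = PolynomialFeatures(include_bias=True).fit_transform(X)
without_bias = PolynomialFeatures(include_bias=False).fit_transform(X)

# LinearRegression fits its own intercept in both cases.
pred_with = LinearRegression().fit(with_bias, y).predict(with_bias)
pred_without = LinearRegression().fit(without_bias, y).predict(without_bias)

print(with_bias.shape, without_bias.shape)
same_predictions = np.allclose(pred_with, pred_without)
print(same_predictions)
```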

Now let's apply it!

📍 Our features are (length, height, thickness).

We transform the training data with the transformer to get train_poly.

poly = PolynomialFeatures(include_bias=False)

poly.fit(train_input)

train_poly = poly.transform(train_input) # the transformed train_input

Checking the shape of the array, the transformation seems to have worked.

Now let's see what the 9 features look like.

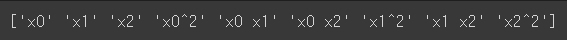

📌 PolynomialFeatures' get_feature_names_out()

Tells you which combination of inputs produced each feature

# check how the 9 features were built

print(poly.get_feature_names_out())

- 'x0': the first feature

- 'x1': the second feature

- ...

- 'x0^2': the square of the first feature

- 'x0 x1': the product of the first and second features

- ...

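Now let's transform the test data.

📍 It's recommended to transform the test set with the transformer that was fitted on the training set.

For PolynomialFeatures, fit() only prepares the feature combinations and doesn't compute any statistics, so transforming the test set on its own would technically work too,

but it's a good habit to always transform the test set based on the training set..!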

test_poly = poly.transform(test_input)

다중 회귀 모델 훈련하기

📍 다중 회귀 모델을 훈련하는 것은 선형 회귀 모델을 훈련하는 것과 같습니다. 즉, 여러 개의 특성을 사용하여 선형 회귀를 수행하는 것입니다.

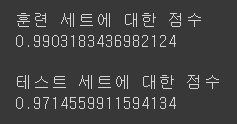

그럼 훈련 후, 훈련 세트와 테스트 세트에 대한 점수를 확인해보겠습니다.

from sklearn.linear_model import LinearRegression

lr = LinearRegression()

# train

lr.fit(train_poly, train_target)

# check the scores

print(lr.score(train_poly, train_target))

print(lr.score(test_poly, test_target))

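The underfitting problem seems to be solved! 😁😁

So what happens if we add even more features? We can add 3rd, 4th, 5th ... power terms!!

Let's create features up to the 5th power.

📌 PolynomialFeatures' degree parameter: sets the maximum degree of the polynomial terms

# add many more features

# include the 3rd-, 4th-, and 5th-degree terms

# degree sets the maximum degree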

poly = PolynomialFeatures(degree = 5, include_bias = False)

poly.fit(train_input)

train_poly = poly.transform(train_input)

test_poly = poly.transform(test_input)

That gives us as many as 55 features!
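The counts 9 and 55 can be checked by hand. With n input features and maximum degree d, the number of polynomial terms of degree 1 through d is C(n + d, d) - 1 (the -1 drops the constant term, matching include_bias=False). The helper name below is made up for this sketch.

```python
from math import comb

def n_poly_features(n_features, degree):
    """Count the monomials of total degree 1..degree in n_features variables."""
    return comb(n_features + degree, degree) - 1

print(n_poly_features(3, 2))  # 9
print(n_poly_features(3, 5))  # 55
```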

Now let's train again.

lr.fit(train_poly, train_target)

# check the scores

print(lr.score(train_poly, train_target))

print(lr.score(test_poly, test_target))

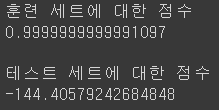

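🤔 Question: Why is the score so terrible?

💡 Answer: Greatly increasing the number of features makes a linear model very powerful. It can fit the training set almost perfectly.

=> In other words, it overfits the training set, which is why it does so badly on the test set.

💡 Solution: Reduce the overfitting. => Use fewer features, or regularize the model as described next.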
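The effect is easy to reproduce. With 56 samples, the default train_test_split leaves 42 for training and 14 for testing, so the 55-feature model has more features than training samples. The sketch below mimics those sizes with purely synthetic random data (not the perch data) and shows the training R² reaching essentially 1 while the test R² does not follow.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(42)
n_train, n_test, n_features = 42, 14, 55   # same sizes as in the post

# Synthetic data: only the first feature actually matters.
X_train = rng.normal(size=(n_train, n_features))
X_test = rng.normal(size=(n_test, n_features))
y_train = 2 * X_train[:, 0] + rng.normal(size=n_train)
y_test = 2 * X_test[:, 0] + rng.normal(size=n_test)

lr = LinearRegression().fit(X_train, y_train)
train_r2 = lr.score(X_train, y_train)
test_r2 = lr.score(X_test, y_test)
print(train_r2, test_r2)
```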

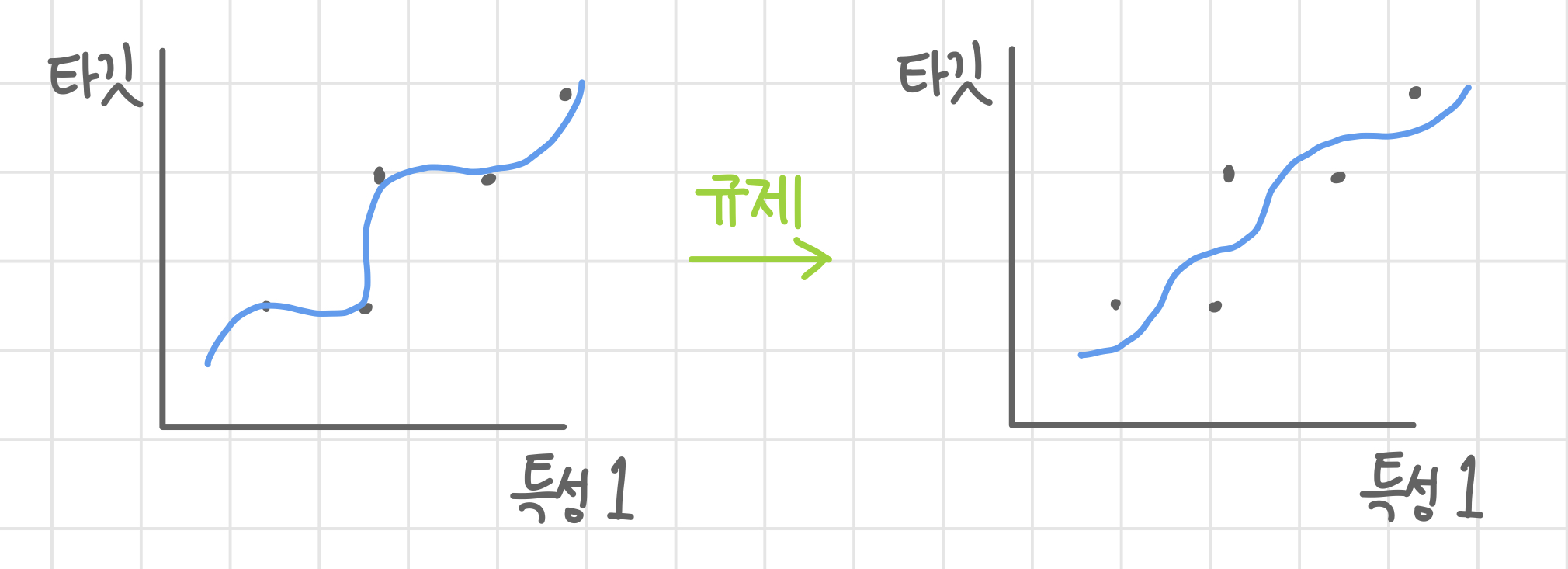

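📌 Regularization

Constraining a machine learning model so that it doesn't overfit the training set

* For a linear regression model, this means shrinking the coefficients (slopes, weights) that multiply the features

Take a model trained on a single feature as an example. As in the figure, regularizing the overfit model reduced its slope, and it ended up learning a more general pattern!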

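Now let's regularize the coefficients of the linear regression model trained on 55 features to fix the overfitting.

📍 Reminder! Before applying regularization, be sure to normalize the scale of the features! If the scales don't match, the coefficients end up with very different magnitudes and won't be penalized fairly.

🤔 Question: Why did we normalize for k-nearest neighbors classification but train LinearRegression without normalizing?

💡 Answer: k-nearest neighbors is based on distances, so when the scales differ, the feature with the larger scale dominates and the wrong neighbors are found. scikit-learn's LinearRegression, on the other hand, solves the least-squares problem numerically, and its result isn't affected by the scale of the features, so it doesn't matter.

🤔 Question: And with regularization?

💡 Answer: With regularization you must normalize! Regularization shrinks the coefficients (slopes, weights), and the size of a coefficient depends on the scale of its feature. If the features are on different scales, some coefficients are penalized far more than others and the result is distorted. (Only when the features are on a comparable scale is each coefficient controlled fairly.)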

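Instead of computing the mean and standard deviation by hand to convert the features into standard scores, we'll use a class.

📌 scikit-learn's StandardScaler class

A transformer that standardizes features. Always use the transformer fitted on the training set to transform the test set too!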

from sklearn.preprocessing import StandardScaler

ss = StandardScaler()

ss.fit(train_poly)

train_scaled = ss.transform(train_poly) # standardize the training set

test_scaled = ss.transform(test_poly) # standardize the test set
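What StandardScaler does can be verified against the manual standard-score formula. The sketch below uses synthetic columns with deliberately different scales (not the perch features), and the demo_ names are only for illustration.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Synthetic data: 4 columns whose scales differ by factors of 10.
rng = np.random.default_rng(1)
demo_train = rng.uniform(0, 1, size=(42, 4)) * [1, 10, 100, 1000]
demo_test = rng.uniform(0, 1, size=(14, 4)) * [1, 10, 100, 1000]

ss = StandardScaler().fit(demo_train)       # statistics come from the training set only
demo_train_scaled = ss.transform(demo_train)
demo_test_scaled = ss.transform(demo_test)  # reuses the training mean and std

# Manual standard score: (x - mean) / std, column by column.
manual = (demo_train - demo_train.mean(axis=0)) / demo_train.std(axis=0)
matches_manual = np.allclose(demo_train_scaled, manual)

print(matches_manual)
print(demo_train_scaled.mean(axis=0).round(6))
print(demo_train_scaled.std(axis=0).round(6))
```

The test set is scaled with the training statistics, so its columns will generally not have exactly zero mean and unit standard deviation.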

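Now that the data is standardized, let's train with regularization.

📍 Ridge and lasso

Linear regression models with regularization added are called ridge and lasso.

- Both shrink the coefficients through regularization, but they differ in how the penalty is applied

- ✨ The amount of regularization can be adjusted: the alpha parameter

- Larger alpha means stronger regularization (reduces overfitting, pushes toward underfitting)

- Smaller alpha means weaker regularization (reduces underfitting, pushes toward overfitting)

🔸 Ridge: the penalty is based on the squared coefficients (L2 regularization)

🔸 Lasso: the penalty is based on the absolute values of the coefficients (L1 regularization)

(It can drive coefficients all the way to 0 -> why it is still used is explained further down. 😁)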
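To make the two penalties concrete, the sketch below computes them for one made-up coefficient vector. The coefficients and alpha are arbitrary illustration values; in scikit-learn's Ridge and Lasso these terms are added to the respective data-fit loss.

```python
import numpy as np

coef = np.array([3.0, -0.5, 0.0, 2.0])  # hypothetical coefficients
alpha = 0.1                              # hypothetical regularization strength

l2_penalty = alpha * np.sum(coef ** 2)     # ridge: alpha * sum of squared coefficients
l1_penalty = alpha * np.sum(np.abs(coef))  # lasso: alpha * sum of absolute coefficients

print(l2_penalty, l1_penalty)
```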

📌 릿지 회귀

📌 사이킷런의 Ridge 클래스

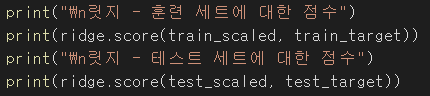

📍그냥 훈련

from sklearn.linear_model import Ridge

ridge = Ridge()

# 훈련

ridge.fit(train_scaled, train_target)

과대적합 되지 않고, 좋은 성능을 냅니다!

그럼, alpha 값을 임의로 지정하여 더욱 좋은 성능을 내보도록 하겠습니다.
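Before tuning alpha, it may help to see what it does to the coefficients. The sketch below fits Ridge on synthetic data (made up for this example) with increasing alpha and prints the overall size of the coefficient vector, which shrinks as alpha grows.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic data: 50 samples, 5 features, known true coefficients.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
y = X @ np.array([4.0, -3.0, 2.0, 0.5, 0.0]) + rng.normal(scale=0.5, size=50)

alphas = [0.01, 1, 100, 10000]
norms = [np.linalg.norm(Ridge(alpha=a).fit(X, y).coef_) for a in alphas]

for a, n in zip(alphas, norms):
    print(a, round(float(n), 3))  # the coefficient norm decreases as alpha increases
```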

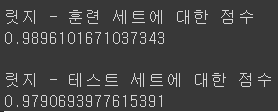

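📍 Finding alpha

One way to find a suitable alpha is to plot R² (the coefficient of determination) against alpha.

The best alpha is the point where the training and test scores are closest to each other while the test score is high!

We increase alpha tenfold at each step from 0.001 to 100 and plot the results.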

import matplotlib.pyplot as plt

train_score = []

test_score = []

# train ridge models while increasing alpha tenfold from 0.001 to 100

alpha_list = [0.001, 0.01, 0.1, 1, 10, 100]

for alpha in alpha_list:

    # create the ridge model

    ridge = Ridge(alpha=alpha)

    # train

    ridge.fit(train_scaled, train_target)

    # store the training and test scores

    train_score.append(ridge.score(train_scaled, train_target))

    test_score.append(ridge.score(test_scaled, test_target))

# plot

# alpha grows tenfold each step, so the left side of the plot would be too cramped; plot log10(alpha) instead (0.001 -> -3)

plt.plot(np.log10(alpha_list), train_score)

plt.plot(np.log10(alpha_list), test_score)

plt.xlabel("alpha")

plt.ylabel("R^2")

plt.show()

From the graph, the best point is at -1 on the x-axis, which is log10(alpha). That means alpha = 10^(-1) = 0.1!

Let's train again with alpha set to 0.1.
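Reading the value off the graph can also be done in code. The sketch below is a rough, self-contained illustration on synthetic data (not the perch data); it only automates the "highest test score" part of the criterion and leaves the "curves closest together" part to the plot.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split

# Synthetic data with the same number of samples as the perch set.
rng = np.random.default_rng(0)
X = rng.normal(size=(56, 20))
y = 3 * X[:, 0] - 2 * X[:, 1] + rng.normal(size=56)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

alpha_list = [0.001, 0.01, 0.1, 1, 10, 100]
test_score = [
    Ridge(alpha=a).fit(X_train, y_train).score(X_test, y_test)
    for a in alpha_list
]

# Pick the alpha whose test score is highest.
best_alpha = alpha_list[int(np.argmax(test_score))]
print(best_alpha, np.log10(best_alpha))
```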

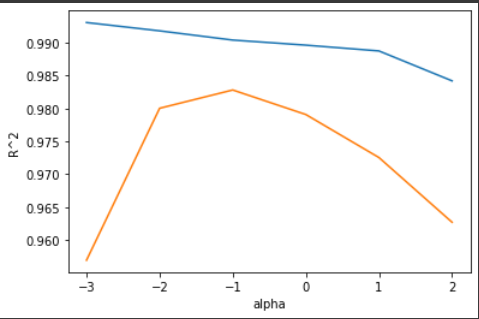

📍 Training with the chosen alpha

# 0.1 gives the best performance 👍

ridge = Ridge(alpha = 0.1)

# train

ridge.fit(train_scaled, train_target)

Good 👍👍

📌 라쏘 회귀

📌 사이킷런의 Lasso 클래스

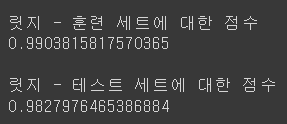

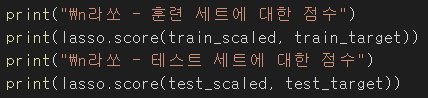

📍그냥 훈련

from sklearn.linear_model import Lasso

lasso = Lasso()

# 훈련

lasso.fit(train_scaled, train_target)

점수 좋습니다. 😁

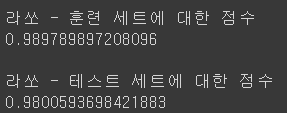

📍 Finding alpha

import matplotlib.pyplot as plt

train_score = []

test_score = []

# train lasso models while increasing alpha tenfold from 0.001 to 100

alpha_list = [0.001, 0.01, 0.1, 1, 10, 100]

for alpha in alpha_list:

    # create the lasso model

    # max_iter=10000: lasso finds the best coefficients iteratively; if the iteration limit is too low a convergence warning appears, so we raise the limit

    lasso = Lasso(alpha=alpha, max_iter=10000)

    # train

    lasso.fit(train_scaled, train_target)

    # store the training and test scores

    train_score.append(lasso.score(train_scaled, train_target))

    test_score.append(lasso.score(test_scaled, test_target))

# plot

# alpha grows tenfold each step, so the left side of the plot would be too cramped; plot log10(alpha) instead (0.001 -> -3)

plt.plot(np.log10(alpha_list), train_score)

plt.plot(np.log10(alpha_list), test_score)

plt.xlabel("alpha")

plt.ylabel("R^2")

plt.show()

From the graph, the best point is at 1 on the x-axis, which is log10(alpha). That means alpha = 10^(1) = 10!

Let's train again with alpha set to 10.

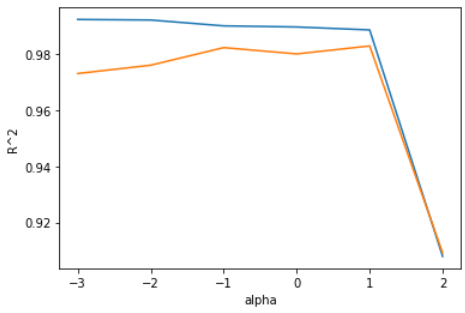

📍 Training with the chosen alpha

# 10 is the best fit!!

lasso = Lasso(alpha = 10)

# train

lasso.fit(train_scaled, train_target)

Very good~!

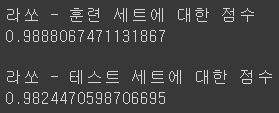

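✨ Looking at the attributes

📌 coef_: the coefficients

As mentioned, lasso can make coefficients exactly 0.

Let's check how many of the 55 features ended up with a coefficient of 0.

# count the coefficients that are exactly 0

print(np.sum(lasso.coef_ == 0))

As many as 40 of the 55 coefficients are 0! In other words, the lasso model uses only 15 of the 55 features.

Because of this property, a lasso model can also be used to pick out the useful features.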
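Picking out the surviving features could look like the sketch below. It runs on synthetic data in which only features 0 and 3 influence the target; the data, the alpha value, and the variable names are all assumptions made for illustration.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

# Synthetic data: 10 features, but only features 0 and 3 matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 10))
y = 5 * X[:, 0] - 4 * X[:, 3] + rng.normal(scale=0.1, size=100)

X_scaled = StandardScaler().fit_transform(X)
lasso = Lasso(alpha=0.5).fit(X_scaled, y)

selected = np.flatnonzero(lasso.coef_ != 0)   # indices of features lasso kept
n_zero = int(np.sum(lasso.coef_ == 0))        # number of features it dropped

print(selected)
print(n_zero)
```

The indices in selected can then be used to slice the feature matrix, for example X_scaled[:, selected].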
